A quick 3D prototype I built to demonstrate how AR/MR users might use their iPhone as an asset browser and a 6DoF controller for object placement. (Built using After Effects and Cinema 4D)

Description

What might Keynote (Apple's presentation software) look like if we reimagined it for a Mixed Reality HMD instead of the traditional monitor, keyboard, and mouse? What changes and added value might we see? I spent a few days exploring this question and designed a set of high-level UX/UI components that an XR version of Keynote might need.

Included Deliverables: UI component designs, user flows, VR wireframe sketches, 3D motion concept renders, body-storming prototypes, drawn concept sketches.

Exploration Scope

To prioritize my time for the most novel and interesting aspects of Keynote XR, I abbreviated the full Keynote user journey into three key milestones: set up, add, and present. These gave my explorations a loose structure and framework.

Scenario

Gloria Hernandez, Professor of Paleontology

Gloria, a paleontology instructor, is planning her lecture about late Cretaceous dinosaurs. To teach the lecture, she's using her Apple head-mounted display (HMD), Keynote XR, and the lecture hall's traditional AV setup.

Many of her students have Apple's HMD, but most still use a laptop or smartphone to follow along during class. Some students also attend class remotely from home.

UI Framework

Keynote is relied upon for its versatility: it needs to work for everything from small classrooms to massive corporate events. Its UI needs to be flexible enough for wild-card scenarios while staying easy for users to set up and understand. In our scenario, Gloria is using Apple's new HMD. She is hoping these headsets can help her students better absorb the content through 3D visuals that fill the lecture hall.

User has added a screen component, which is anchored in relation to the stage (built in VR using Tvori)

Screen

The Screen is a traditional, windowed presentation canvas • In other words, a flat home for flat things. This is what an auditorium projector will display, and it can be seen by people with AND without MR devices. While HMDs will clearly be the superior way to enjoy a presentation, we don't want folks without an HMD to feel like they've attended a silent disco without a set of headphones.

Remote audiences will see the Screen as a floating AR window, amongst any Spatial Content the presenter might have placed on the Stage.

Volumetric holograms won't replace the need for good ol' text, photos, and videos right away. So this is a necessary accommodation to keep Keynote versatile.

User starting with an empty stage (built in VR using Tvori)

Stage

Assemble 3D/spatial content that can fill an auditorium, from the comfort of your desk • The Stage is a bounding box that anchors all other components. When a user builds their project, they'll place all assets (2D or 3D) on and in relation to the Stage. Before showtime, the presenter scales and positions this virtual Stage to fit their physical space, and all assets scale proportionally with it.

Audiences who are in the same space as the presenter see the stage at whichever scale/position the presenter set.

Remote audiences can scale the stage up for a larger-than-life experience, or scale it down and place it off to the side.
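The proportional scaling described above can be sketched in a few lines. This is only an illustration under stated assumptions, not how Keynote XR would actually be built: the `Stage` and `Asset` classes, their fields, and the `layout` function are all hypothetical names, and the sketch ignores rotation so that a stage is just a world position plus one uniform scale factor.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Stage:
    """Hypothetical stage: an anchor position plus one uniform scale factor."""
    origin: Vec3 = (0.0, 0.0, 0.0)  # where the stage sits in the viewer's space (meters)
    scale: float = 1.0              # 1.0 = the size the presenter authored at their desk

    def to_world(self, local: Vec3) -> Vec3:
        # Stage-local coordinates are scaled, then offset by the stage origin.
        return tuple(o + self.scale * c for o, c in zip(self.origin, local))


@dataclass
class Asset:
    """Hypothetical asset, authored relative to the stage rather than the room."""
    name: str
    local_position: Vec3
    local_size: float


def layout(stage: Stage, assets: List[Asset]) -> Dict[str, Tuple[Vec3, float]]:
    """Return each asset's position and size for one viewer's copy of the stage."""
    return {
        a.name: (stage.to_world(a.local_position), a.local_size * stage.scale)
        for a in assets
    }


if __name__ == "__main__":
    assets = [Asset("t_rex", (0.5, 0.0, 0.25), 0.25)]
    desk = Stage()                                          # authoring scale
    auditorium = Stage(origin=(0.0, 0.0, -10.0), scale=20.0)  # presenter's showtime setup
    print(layout(desk, assets))
    print(layout(auditorium, assets))
```

Because assets only ever store stage-relative values, a remote viewer could hold their own `Stage` with a different origin and scale and still see the same arrangement.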

Content Creation

Now that Gloria has set up the stage, she is ready to start adding spatial content to her presentation. In the future, Keynote XR will be able to

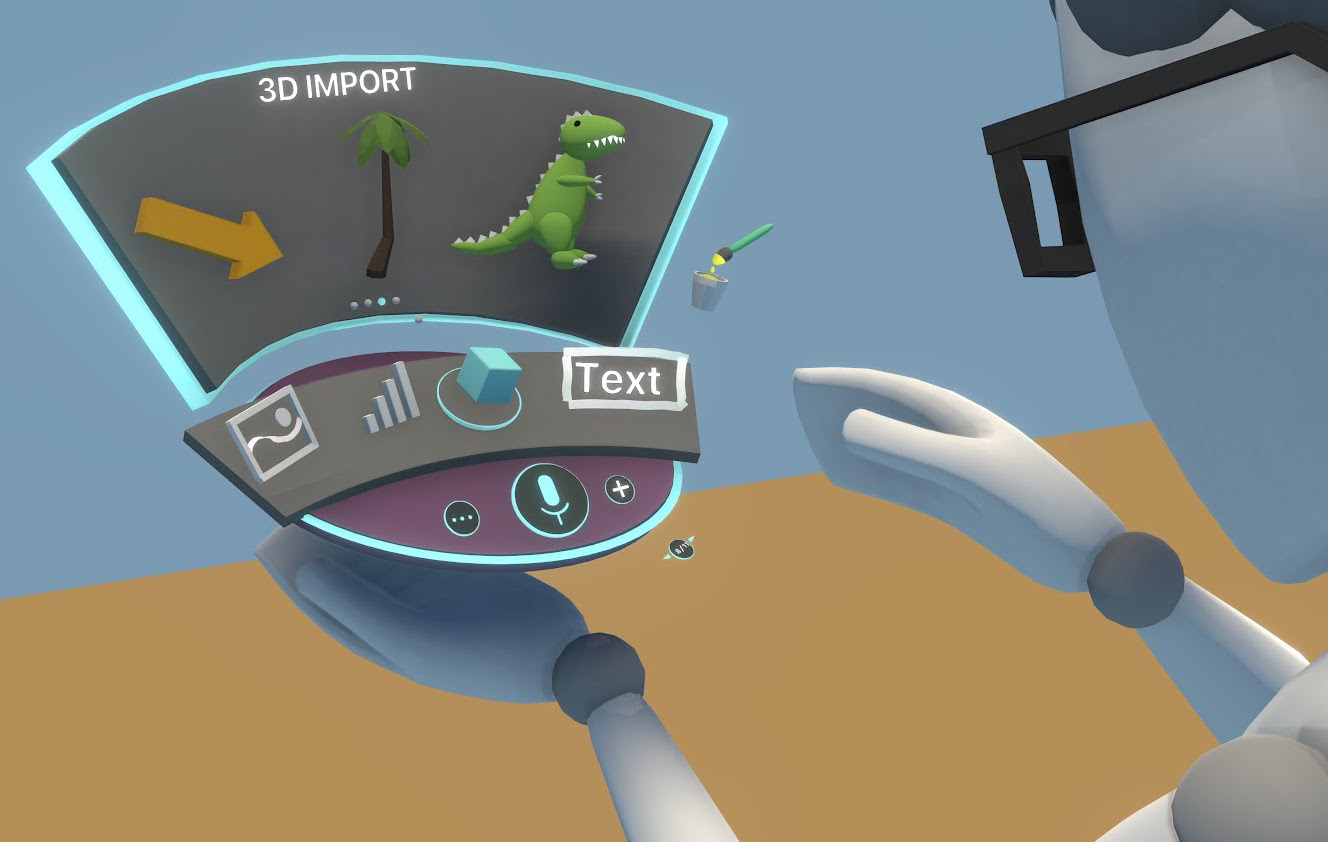

A prototype of a hand-locked Palette. (Animated in VR using Tvori)

Palette

All assets in your Keynote start life on the palette. This component allows you to browse/add 3D objects, add new slides, enter text or add photos.

The Palette also comes in several flavors, matching whichever interaction mode you feel like using.

(More on hand, iPhone, and voice inputs below)

Spatial Content

This is where Mixed Reality really shines. Want graphics that audiences can summon and rotate to get a closer look? You get 'em. 3D animated assets from Sketchfab that you can use like the clipart of yesteryear? Absolutely! Whales flying overhead through the auditorium? No. Magic Leap will sue you... 💩

This content will originate in the palette. It lives within the bounds of the Stage, and can be interacted with by audiences (both remote and co-located).

Avatar

This component is entirely for audiences in a different physical space than the presenter. When building a Keynote, the presenter will be able to choose where on the Stage their avatar will appear. The presenter's head-mounted display will be able to use head movement, hand tracking, and voice to drive the body and face of a 3D avatar.

For presenters who are not wearing an HMD, the front-facing camera on an iPhone or Mac could provide similar MoCap, or capture volumetric video to use in place of a puppeted avatar.

Putting it all together

Multimodal Interactions

With Apple's rich ecosystem of mobile and wearable devices, new interaction modalities will likely emerge that are better suited for spatial computing tasks than traditional input devices. Keynote XR will have multiple ways to add and edit content. Just like there is a tool for every job, there is an input for every occasion.

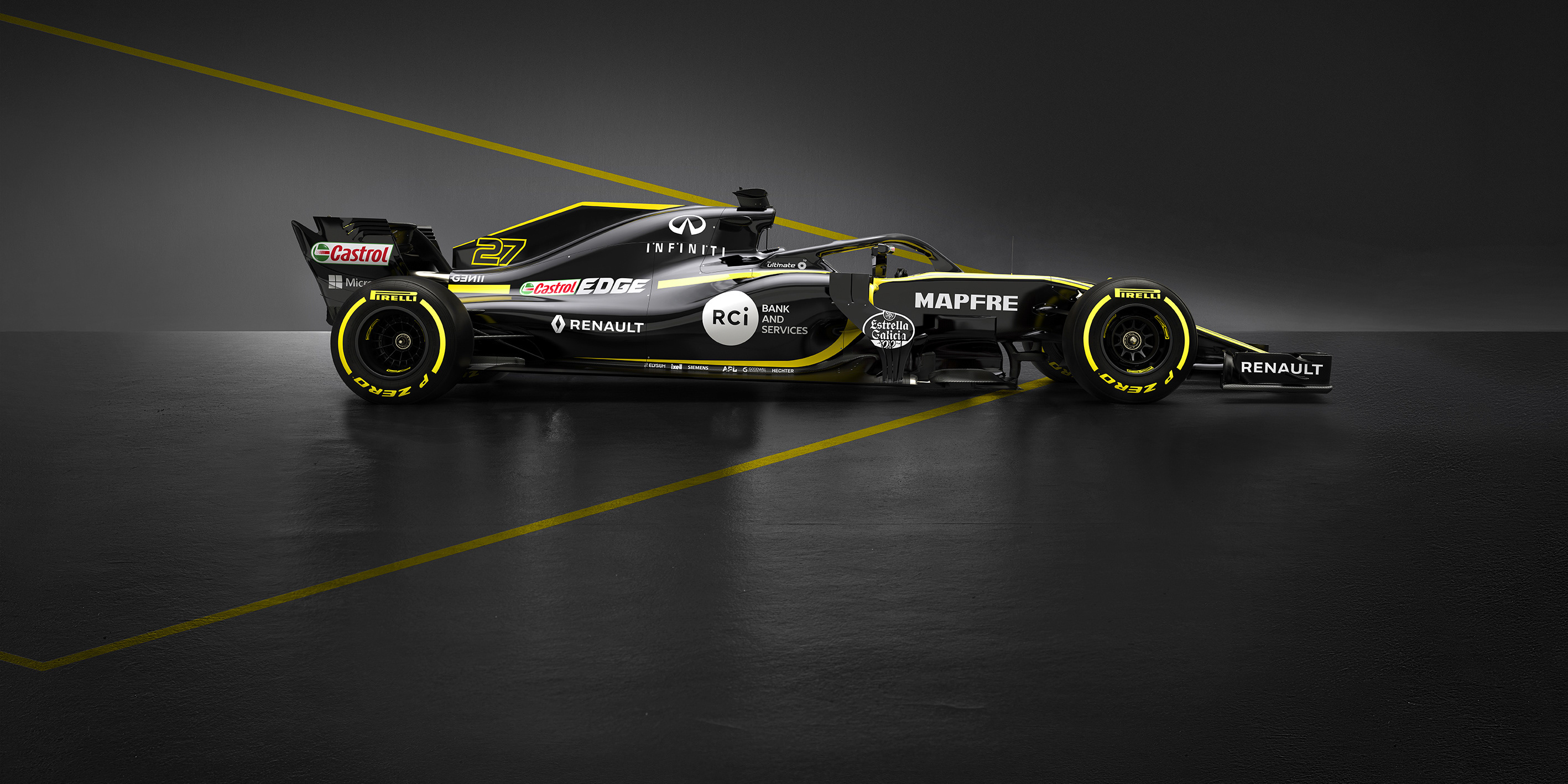

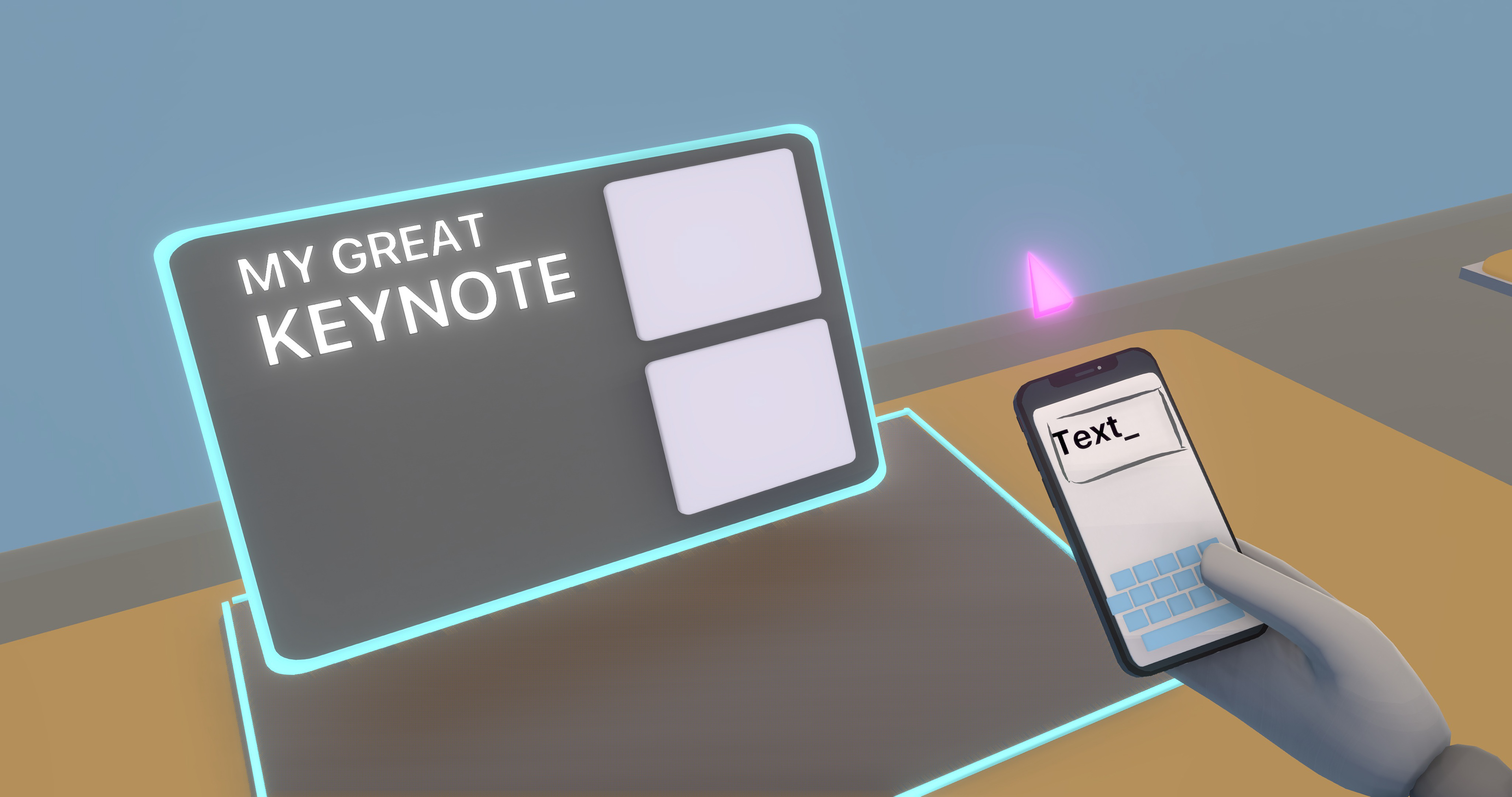

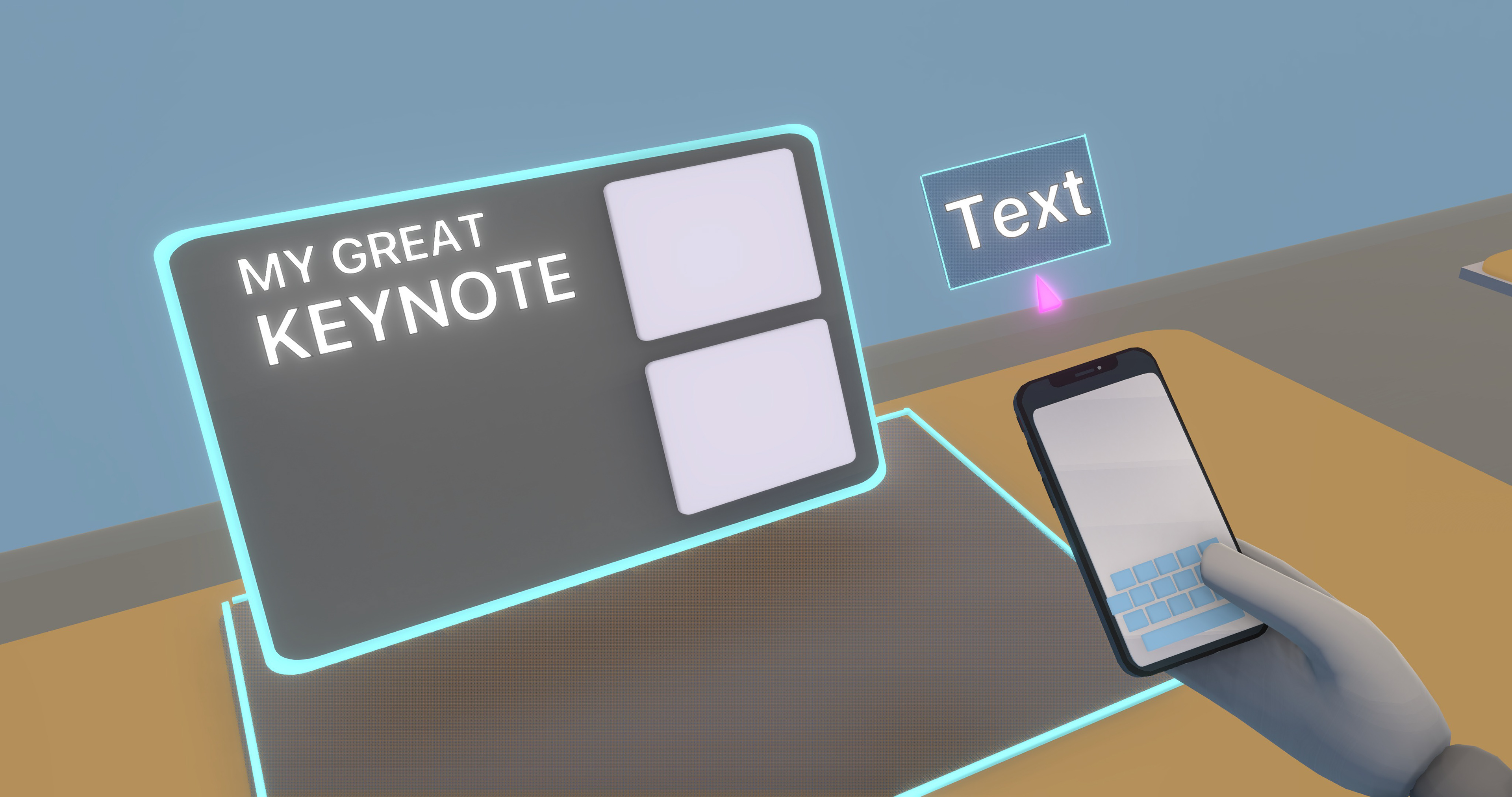

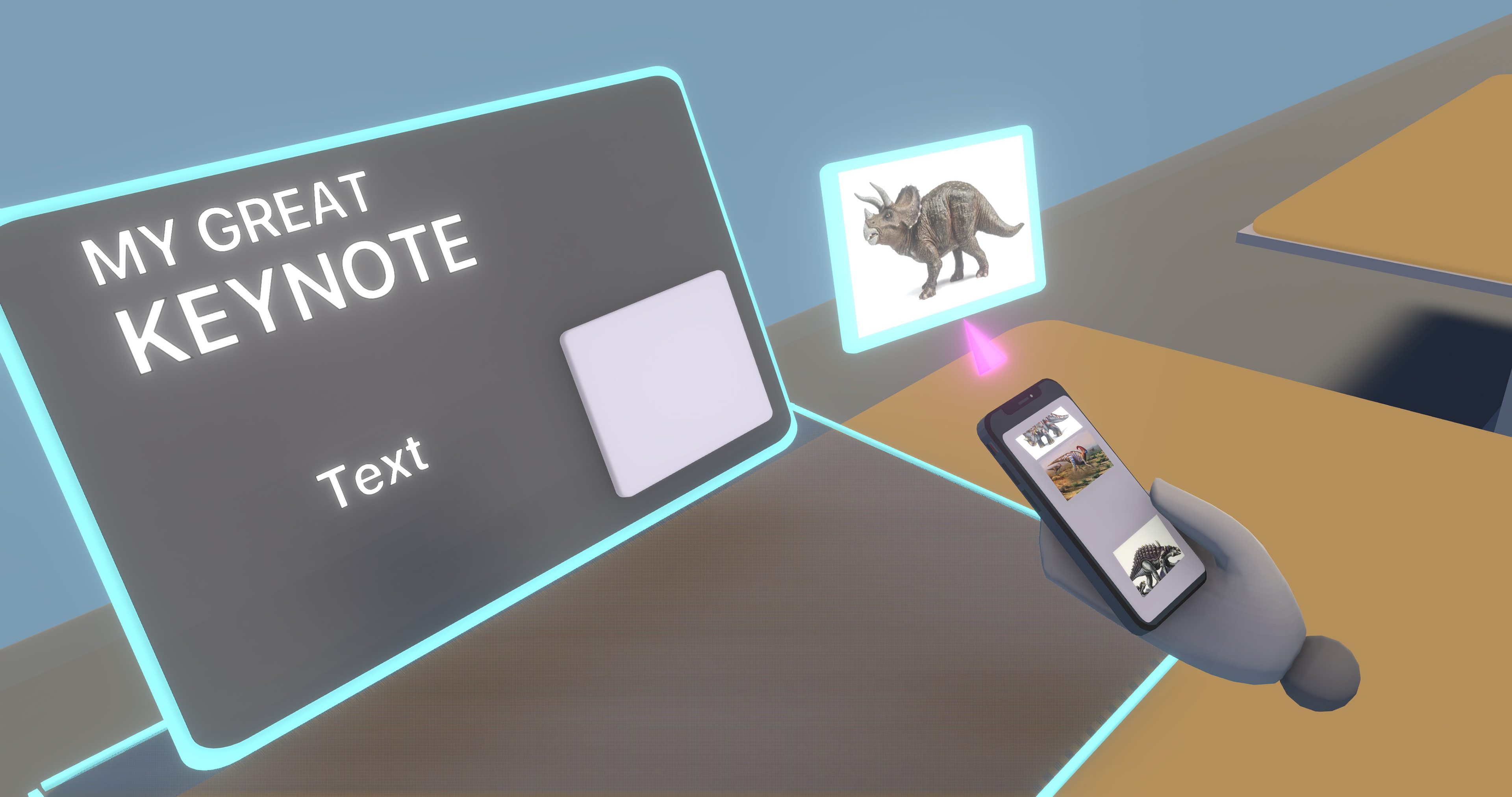

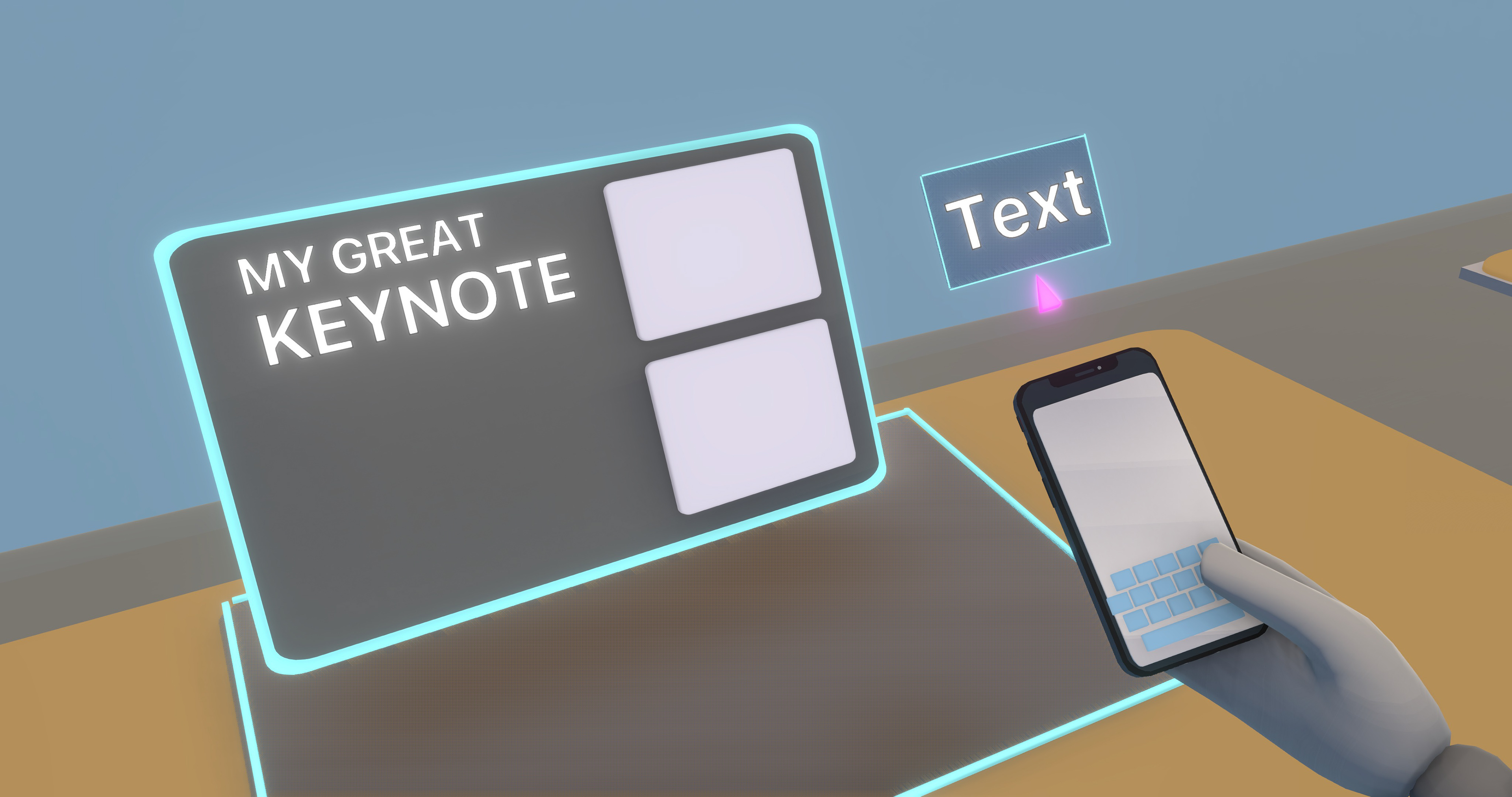

Phone

Users can browse/import 3D assets, photos/video and input text on a smartphone. When the user taps their selection, an AR version pops out and anchors to the smartphone as a spatially placeable object. 6DoF tracking between the HMD and smartphone gives users a physical tool to place, rotate, scale, and interact with the AR asset. All features of the palette are available via the iPhone.

Pros

• Phone keyboard offers familiar text input

• Using the screen for near-field interactions may reduce eye strain from focal plane conflict

• Haptic feedback

• Physical device in hand gives onlookers a better social cue than hand gestures alone
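To make the phone-as-controller idea concrete, here is a rough sketch of how an asset "anchored" to the phone could follow it. Everything here is an assumption for illustration: the function names, the y-up coordinate convention with -z pointing out of the phone toward the scene, and the idea that the HMD reports the phone's pose as a position plus a 3x3 rotation matrix. A real tracking API would supply the pose in its own format.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def yaw(degrees: float) -> Mat3:
    """Rotation matrix for a turn about the vertical (y) axis."""
    c = math.cos(math.radians(degrees))
    s = math.sin(math.radians(degrees))
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rotate(r: Mat3, v: Vec3) -> Vec3:
    """Apply a 3x3 rotation matrix to a vector."""
    return tuple(sum(r[i][j] * v[j] for j in range(3)) for i in range(3))


def held_asset_position(phone_pos: Vec3, phone_rot: Mat3, offset: Vec3) -> Vec3:
    """World position of an asset held at a fixed offset in the phone's own frame.

    The offset is rotated by the phone's orientation, then added to the
    phone's position, so the asset moves and turns with the device.
    """
    rx, ry, rz = rotate(phone_rot, offset)
    return (phone_pos[0] + rx, phone_pos[1] + ry, phone_pos[2] + rz)


if __name__ == "__main__":
    offset = (0.0, 0.0, -0.25)  # hypothetical: asset floats 25 cm beyond the phone
    # Phone held level, then turned 90 degrees about the vertical axis.
    print(held_asset_position((1.0, 1.0, 0.0), yaw(0.0), offset))
    print(held_asset_position((1.0, 1.0, 0.0), yaw(90.0), offset))
```

When the user releases the asset, its last world position would be frozen and stored relative to the Stage, so it no longer follows the phone.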

Here the user has selected a 3D model on the screen of their iPhone and is placing it spatially within the Stage of a 3D Keynote slide.

Hands

Using just their hands, users have everything they need to build a full MR Keynote. Open your non-dominant hand upward and the Palette springs open. From here, you can browse and grab content to fill the Stage. Don't want to keep your hand up constantly? Dock the palette anywhere within reach for better comfort.

Pros

• Natural, device-free interaction

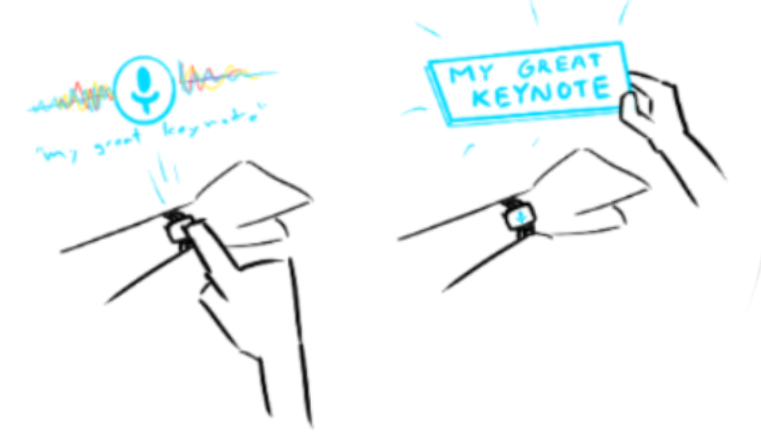

Voice

Users can import assets, make changes, and input text quickly by using their voice. For example, saying "Hey Siri, make a text box that says My Great Keynote" would produce a floating AR asset that can be placed on the Stage.

Voice can also be used via an iPhone or an Apple Watch.

Pros

• Hands-free

• Fast text input

Device Interoperability

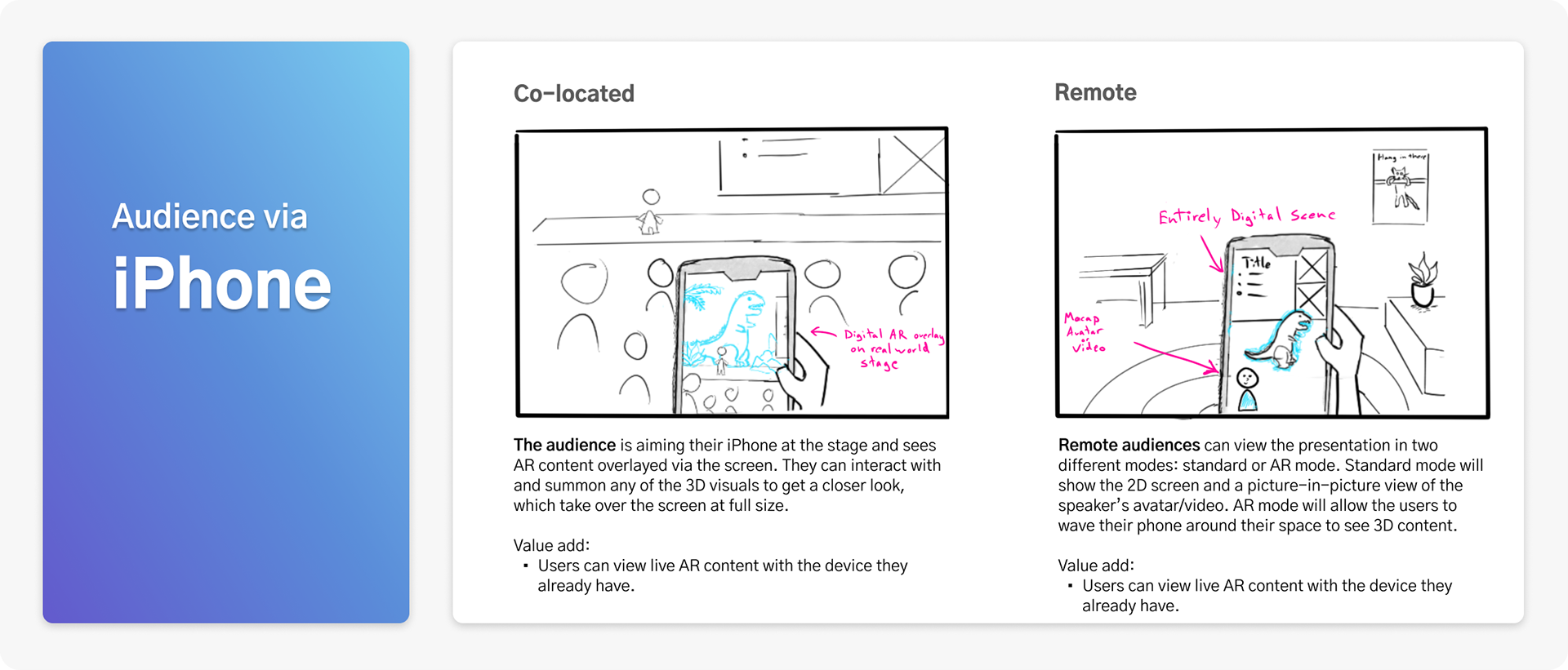

HMDs will eventually be as prevalent as today's smartphones. But it ain't gonna happen overnight. In the scenario with Gloria, many of her students could be at home using a computer to watch the lecture.

It is crucial for us to account for all of the asymmetric combinations that our users will encounter. This will let us work out how Keynote XR can be valuable to people across Apple's entire family of products.

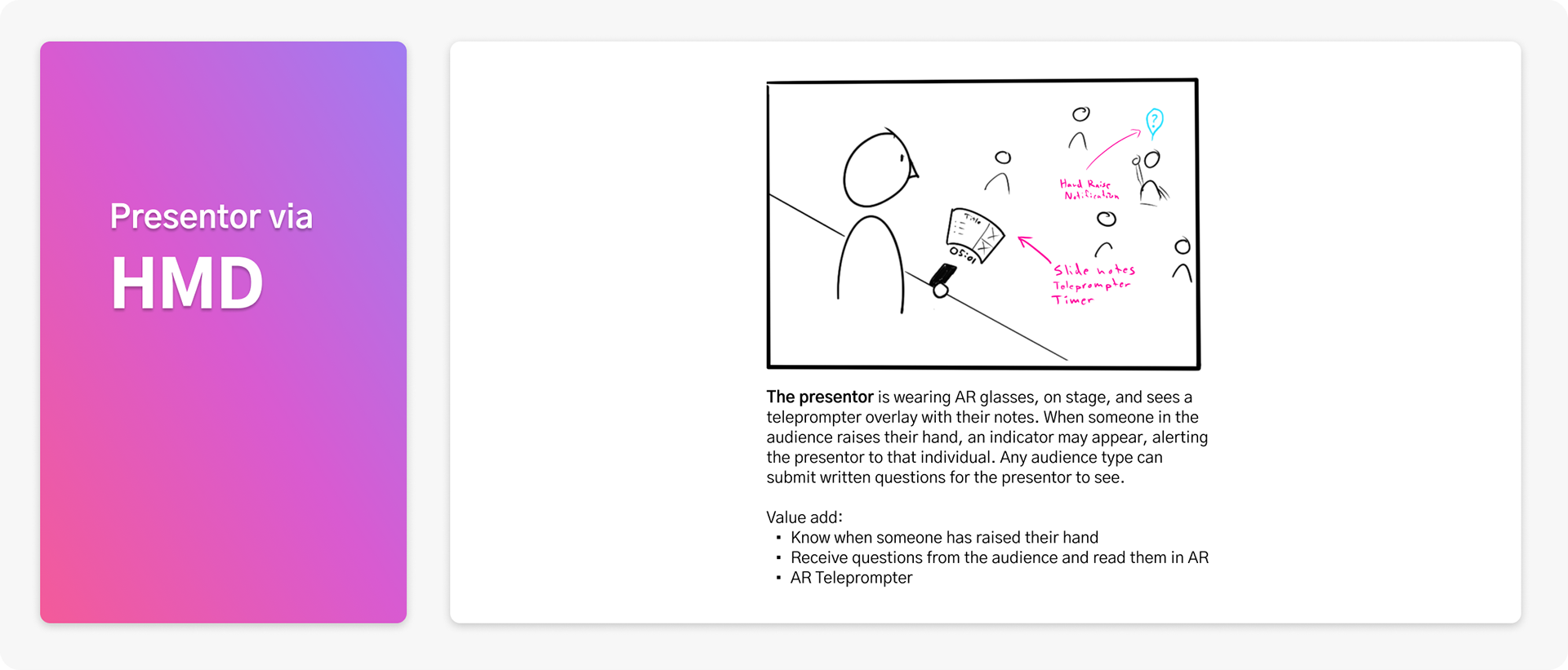

Additional Features & Value Props

Eye Contact Trainer

Stage Fright Audience Blocker/Simulator

Transport your audience and set the right mood by adding 3D environments as backdrops for your Keynote.

Audiences can interact with any 3D content in a Keynote by summoning it into their kinesphere for a hands-on view.

If someone raises their hand with a question, your HMD will paint an indicator above their head and give you a subtle notification.

Never go over the allotted time, thanks to an AR timer and notifications.

An AR teleprompter will help you stay on script.

No matter where or how the audience watches your Keynote, they can see a visual representation of you through avatar MoCap or volumetric video capture.